
Save the sheep!

- Thread starter ssrdam

Miggie

Well-known member

survival of the non-retarded

Apparently this happened in a Jumbo, but it looks like a one-man action.

And one more:


Mike

Lievergezond

Well-known member

Cassandra syndrome: 9 Reasons warnings go unheeded - PsychMechanics


October 13, 2021 by Hanan Parvez

Cassandra syndrome or Cassandra complex is when a person’s warning goes unheeded. The term is derived from Greek mythology.

Cassandra was a beautiful woman whose beauty seduced Apollo into granting her the gift of prophecy. However, when Cassandra refused Apollo’s romantic advances, he placed a curse on her. The curse was that nobody would believe her prophecies.

Hence, Cassandra was condemned to a life of knowing future dangers, yet being unable to do much about them.

Real-life Cassandras exist, too. These are people with foresight, people who can see things while they are still seeds. They’re able to see the trend of where things are headed.

Yet, these geniuses who can project their minds into the future are often ignored and not taken seriously. In this article, we explore why that happens and how to remedy it.

Why warnings are not heeded

Several human tendencies and biases contribute to not taking warnings seriously. Let’s look at them one by one.

1. Resistance to change

Humans are excellent at resisting change. This tendency is deep-rooted in us. From an evolutionary perspective, it’s what helped us conserve calories and enabled us to survive for millennia.

Resistance to change is why people give up early on new projects, why they can’t stick to their newly formulated plans, and why they don’t take warnings seriously.

What’s worse is that those who warn, those who try to ruffle the status quo or ‘rock the boat’, are viewed negatively.

No one wants to be viewed negatively. So those who warn are not only up against the natural human resistance to change, but they also risk disrepute.

2. Resistance to new information

Confirmation bias leads people to see new information in the light of what they already believe. They selectively interpret information to fit their own worldview. This is true not only at the individual level but also at the group or organizational level.[1]

Groups also tend toward groupthink, i.e., disregarding beliefs and views that go against what the group believes.

3. Optimism bias

People like to believe that the future will be rosy, all rainbows and sunshine. While this belief gives them hope, it also blinds them to potential risks and dangers. It’s much wiser to see what can go wrong and to put preparations and systems in place to deal with a potentially not-so-rosy future.

When someone gives a warning, starry-eyed optimists often label them a ‘negative thinker’ or an ‘alarmist’. They’re like:

“Yeah, but that can never happen to us.”

Anything can happen to anybody.

4. Lack of urgency

How willing people are to take a warning seriously depends to some extent on the urgency of the warning. If the warned event is likely to happen in the distant future, the warning may not be taken seriously.

It’s the “We’ll see when that happens” attitude.

Thing is, ‘when that happens’, it might be too late to ‘see’.

It’s always better to prepare for future dangers as soon as possible. The thing might happen sooner than predicted.

5. Low probability of the warned event

A crisis is defined as a low-probability, high-impact event. The warned event or potential crisis being highly improbable is a big reason why it’s ignored.

You warn people about something dangerous that might happen, despite its low probability, and they’re like:

“Come on! What are the odds of that ever happening?”

Just because it’s never happened before or has low odds of happening doesn’t mean it can’t happen. A crisis doesn’t care about its prior probability. It only cares about the right conditions. When the right conditions are there, it’ll rear its ugly head.

6. Low authority of the warner

When people have to believe something new or change their previous beliefs, they rely more on authority.[2]

As a result, who gives the warning becomes more important than the warning itself. If the person issuing the warning isn’t trusted or lacks authority, their warning is likely to be dismissed.

Trust is important. We’ve all heard the story of the Boy Who Cried Wolf.

Trust becomes even more important when people are uncertain, when they can’t deal with the overwhelming information, or when the decision to be made is complex.

When our conscious mind can’t make decisions because of uncertainty or complexity, it hands them over to the emotional part of our brain. The emotional part of the brain decides based on shortcuts like:

“Who gave the warning? Can they be trusted?”

“What decisions have others made? Let’s just do what they’re doing.”

While this mode of making decisions can be useful at times, it bypasses our rational faculties. And warnings need to be dealt with as rationally as possible.

Remember that warnings can come from anyone, whether high or low in authority. Dismissing a warning solely based on the authority of the warner can prove to be a mistake.

7. Lack of experience with a similar danger

If someone issues a warning about an event and that event, or something similar to it, has never happened before, the warning can be easily dismissed.

In contrast, if the warning evokes a memory of a similar past crisis, it’s likely to be taken seriously.

This then enables people to do all the preparations beforehand, letting them deal with the tragedy effectively when it strikes.

A chilling example that comes to mind is that of Morgan Stanley. The company had offices in the World Trade Center (WTC) in New York. When the WTC was attacked in 1993, the company realized that something similar could happen again, the WTC being such a symbolic structure.

They trained their employees on how to react in case something similar were to happen again. They had proper drills.

When the North Tower of the WTC was attacked in 2001, the company had employees in the South Tower. The employees evacuated their offices at the push of a button, as they had been trained. A few minutes later, when all the Morgan Stanley offices were empty, the South Tower was hit.

8. Denial

It could be that the warning is ignored simply because it has the potential to evoke anxiety. To avoid feeling anxiety, people deploy the defense mechanism of denial.

9. Vague warnings

How the warning is issued matters, too. You can’t just raise alarms without clearly explaining what it is that you fear will happen. Vague warnings are easily dismissed. We fix that in the next section.

Anatomy of an effective warning

When you’re issuing a warning, you’re making a claim about what’s likely to happen. Like all claims, you need to back up your warning with solid data and evidence.

It’s hard to argue with data. People may not trust you or may see you as having low authority, but they will trust the numbers.

Also, find a way to verify your claims. If you can verify what you’re saying objectively, people will set aside their biases and march into action. Data and objective verification remove the human elements and biases from decision-making. They appeal to the rational part of the brain.

The next thing you should do is clearly explain the consequences of heeding or not heeding the warning. This time, you’re appealing to the emotional part of the brain.

People will do what they can to avoid misfortunes and heavy costs, but they first need to be convinced that such things can happen.

Showing works better than telling. For example, if your teenage son insists on riding a motorbike without a helmet, show him pictures of people with head injuries from motorbike accidents.

As Robert Greene said in his book, The 48 Laws of Power, “Demonstrate, do not explicate.”

Clearly explaining the warning and demonstrating the negative consequences of not heeding it is, however, only one side of the coin.

The other side is to tell people what can be done to prevent the future disaster. People may take your warning seriously, but if you have no action plan, you may only paralyze them. When you don’t tell them what to do, they’ll probably do nothing.

The flipside of Cassandra syndrome: Seeing warnings where there were none

It’s mostly true that crises don’t happen out of the blue, and that they often come with what crisis management scholars call ‘preconditions’. Many a crisis could’ve been avoided if the warnings had been heeded.

At the same time, there’s also a human bias called hindsight bias, which says:

“In retrospect, we like to think we knew more at some point in the past than we actually did.”

It’s the “I knew it” bias after a tragedy occurs: believing that the warning was there and you should’ve heeded it.

Sometimes, the warning just isn’t there. You could have had no way of knowing.

Because of hindsight bias, we overestimate what we knew or the resources we had in the past. Sometimes, there’s simply nothing you could’ve done given your knowledge and resources at that point in time.

It’s tempting to see warnings where there were none because believing that we could’ve averted the crisis gives us a false sense of control. But this belief also burdens a person with unnecessary guilt and regret.

Believing that the warning was there when it wasn’t is also a way to blame authorities and decision-makers. For instance, when a tragedy like a terror attack happens, people are often like:

“Were our intelligence agencies sleeping? How come they missed it?”

Well, crises don’t always come with warnings served on a platter for us to heed. At times, they just sneak up on us and there’s absolutely nothing anybody could’ve done to prevent them.

References

- Choo, C. W. (2008). Organizational disasters: Why they happen and how they may be prevented. Management Decision.

- Pilditch, T. D., Madsen, J. K., & Custers, R. (2020). False prophets and Cassandra’s curse: The role of credibility in belief updating. Acta Psychologica, 202, 102956.



Fries Foar Frijhiid

Well-known member

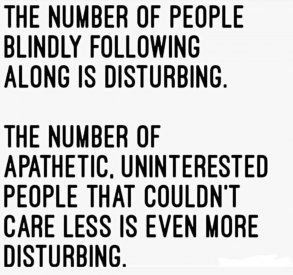

Group 2 is larger than group 1.
